AI Engineering Roadmap For 2026

The skills you actually need to get hired. A practical, industry-backed path to becoming a real AI engineer.

Hey friends - Happy Tuesday!

There is a lot of noise around AI engineering, and most of it makes the journey look harder than it really is. And to be honest, most of the “AI Engineering” roadmaps you see online are actually Data Science roadmaps with a different title.

That is why I decided to build a proper roadmap myself. Over the last few years, I’ve worked on multiple AI projects in industry, and I’ve seen exactly what companies expect from a real AI engineer.

The [Full Notion Roadmap] is simply a reflection of that. Just the practical steps that match the work.

Let’s go.

What AI engineering really means

AI engineering is a builder role.

You are not training models, designing networks, or doing research. Companies already have strong models available. Your job is to take these models and turn them into something useful inside the business.

That means connecting the model to company data, integrating it with internal tools and workflows, and giving people a clean way to use it. And while doing that, you make sure the system is safe, fast, and reliable.

This is why AI engineering feels closer to data engineering and backend work than to data science. Once you see it this way, the whole roadmap becomes clear.

AI Engineering Roadmap

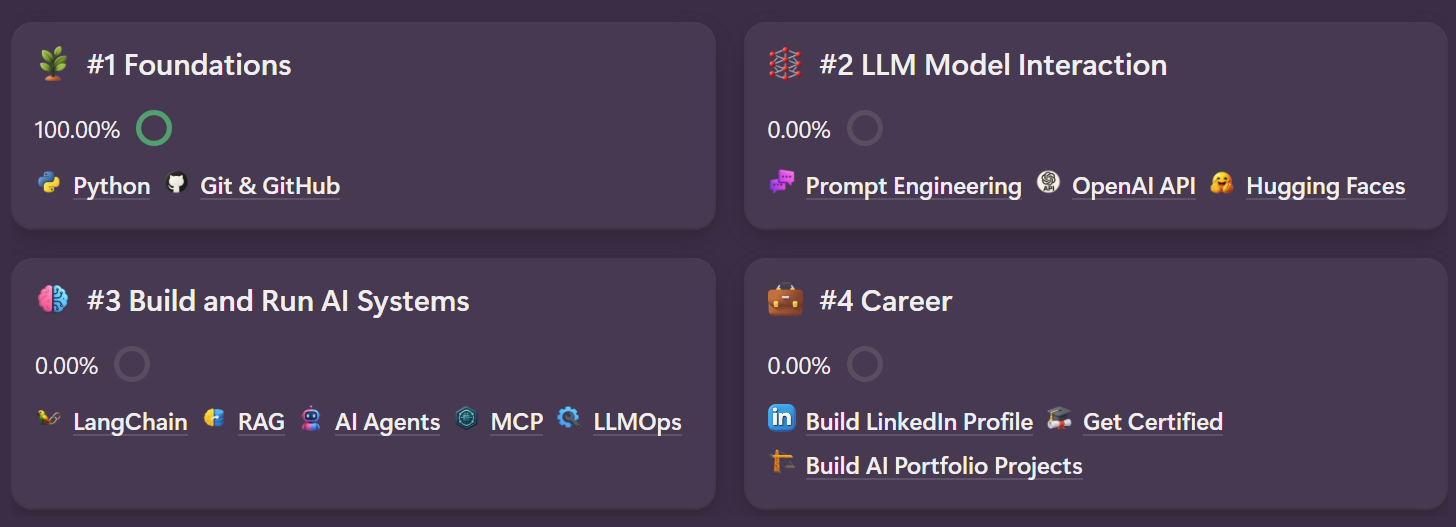

Now that you know what the role actually looks like, here is the path I recommend. I’ve split it into four clear phases that take you from the basics to building real AI systems. You can open the full Notion roadmap here: [LINK]

🌱 #1 Foundations

Learn Python, Git, and GitHub so you can build a solid technical base and write code with confidence.

🧠 #2 LLM Model Interaction

Master prompting and APIs like OpenAI and Hugging Face so you can talk to models, control them, and integrate them into your own code.

🧩 #3 Build and Run AI Systems

Learn LangChain, RAG, AI agents, MCP, and basic LLMOps so you can build complete AI systems that understand your data and run reliably.

💼 #4 Career

Build strong portfolio projects, upgrade your LinkedIn profile, and earn certifications so you can stand out in today’s competitive market.

Let’s dive deep into each phase.

#1 The Foundations (Python and Git)

Before you build anything with AI, you need a simple foundation. And that foundation is Python.

Almost every tool, model, and workflow in AI runs on Python. You do not need to master every corner of the language, but you should feel comfortable with the essentials: lists and dictionaries, loops, functions, working with JSON, and making API requests. These few things show up in almost every AI project.
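To make those essentials concrete, here is a small sketch that uses a list of dictionaries, a loop, a function, and a JSON round trip. The support-ticket data and the function name `filter_by_priority` are made up for illustration; the point is only the shape of the code, which is close to what you will see when you prepare data for a model or read its output.

```python
import json

# A list of dictionaries: the shape most API payloads and results take.
# The tickets are invented sample data.
tickets = [
    {"id": 1, "text": "Cannot log in", "priority": "high"},
    {"id": 2, "text": "Typo on pricing page", "priority": "low"},
    {"id": 3, "text": "Checkout fails on mobile", "priority": "high"},
]


def filter_by_priority(items, priority):
    """Return only the items whose 'priority' field matches."""
    selected = []
    for item in items:  # a plain loop over the list
        if item["priority"] == priority:
            selected.append(item)
    return selected


high = filter_by_priority(tickets, "high")

# Serialize to a JSON string (what you would send over an API)...
payload = json.dumps(high, indent=2)

# ...and parse it back into Python objects (what you do with a response).
restored = json.loads(payload)

print(payload)
```

If you can read and write code like this without looking things up, you are ready for the next phase.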

Once you start writing code, you also need a place to store it and manage it. That is where Git and GitHub come in. Create a repo, push your work, write clear commit messages, and use branches when you try new ideas. It keeps your work organized and makes you look professional when you apply for jobs.

With Python and Git in place, you have everything you need to start building real AI projects.

#2 LLM Model Interaction

Once you have the basics in place, the next step is learning how to work directly with AI models. This is where AI engineering really begins, because now you move from writing normal code to controlling intelligent systems.

Prompt Engineering

Prompt engineering is the part most people misunderstand. It is not typing a short phrase into a chat window and hoping for a good answer.

It is clear communication.

It is giving context, describing the task, setting the role, and showing examples of what you want.

Good prompts make the model stable and predictable. Weak prompts make the model chaotic.
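One way to picture this is a small helper that assembles a prompt from those four parts: role, context, task, and examples. This is a sketch, not a standard recipe. The function name `build_messages` and the sample content are my own, and I am assuming the chat-style message format (a list of `role` and `content` pairs) that APIs like OpenAI's accept.

```python
def build_messages(role, context, task, examples, user_input):
    """Assemble a chat-style prompt from role, context, task, and examples.

    `examples` is a list of (input, expected_output) pairs shown to the
    model before the real input (often called few-shot prompting).
    """
    system_text = f"{role}\n\nContext:\n{context}\n\nTask:\n{task}"
    messages = [{"role": "system", "content": system_text}]

    # Each example becomes a user turn followed by the answer we want.
    for example_input, example_output in examples:
        messages.append({"role": "user", "content": example_input})
        messages.append({"role": "assistant", "content": example_output})

    # The real input always goes last.
    messages.append({"role": "user", "content": user_input})
    return messages


messages = build_messages(
    role="You are a support assistant for an online shop.",
    context="Customers write in about orders, refunds, and login problems.",
    task="Classify each message as 'order', 'refund', or 'login'. Reply with one word.",
    examples=[
        ("I never received my package.", "order"),
        ("I want my money back.", "refund"),
    ],
    user_input="I forgot my password.",
)

for message in messages:
    print(f"{message['role']}: {message['content']}")
```

Compare this with typing "classify this message" into a chat window: the model now knows who it is, what the situation is, what to do, and what a good answer looks like.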

This skill becomes the backbone of every AI workflow you will build later.

OpenAI API

After you understand prompts, the next step is learning how to call models through an API [LINK].

This is when you stop “using AI” and start “building with AI”.

Calling a model from Python lets you integrate AI into your own tools, scripts, and applications.

You send a structured request, receive a response, and use that output anywhere you want.
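Here is a minimal sketch of that request and response cycle against OpenAI's Chat Completions endpoint, using only the Python standard library so you can see every piece. In practice most people use the official `openai` package instead. The model name `gpt-4o-mini` is an assumption (swap in whichever model your account can use), and the helper names `build_request`, `extract_text`, and `ask` are my own.

```python
import json
import os
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"


def build_request(prompt, api_key, model="gpt-4o-mini"):
    """Build the structured HTTP request; the model name is an assumption."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def extract_text(response_body):
    """Pull the generated text out of a parsed Chat Completions response."""
    return response_body["choices"][0]["message"]["content"]


def ask(prompt):
    """Send the prompt and return the model's reply (needs network access)."""
    api_key = os.environ["OPENAI_API_KEY"]  # never hard-code your key
    request = build_request(prompt, api_key)
    with urllib.request.urlopen(request, timeout=30) as response:
        return extract_text(json.load(response))
```

With your key stored in the `OPENAI_API_KEY` environment variable, `ask("Summarize this ticket in one sentence: ...")` returns a plain string that you can pass to any other part of your code.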

It is a small technical step, but it opens the door to real engineering work.